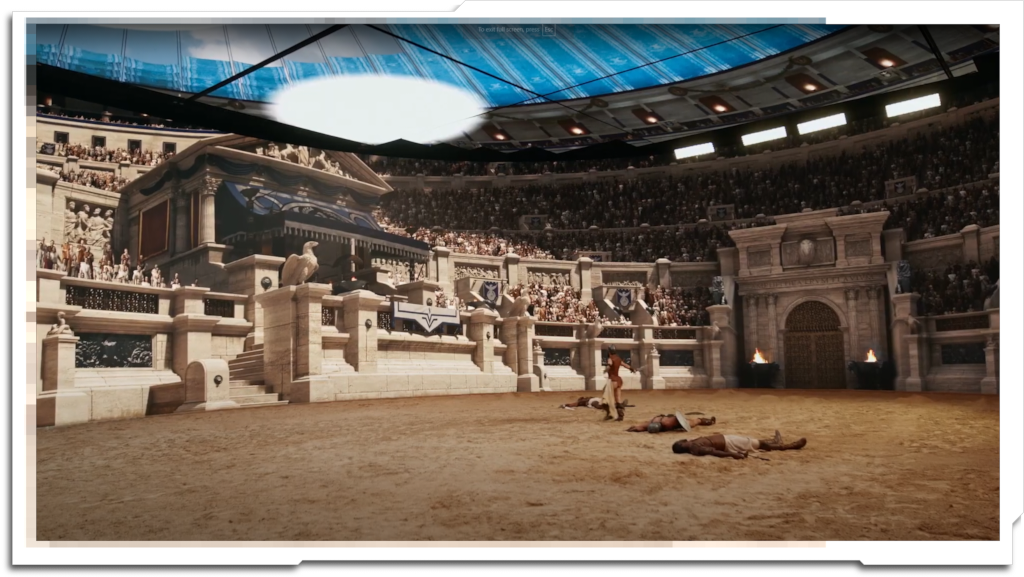

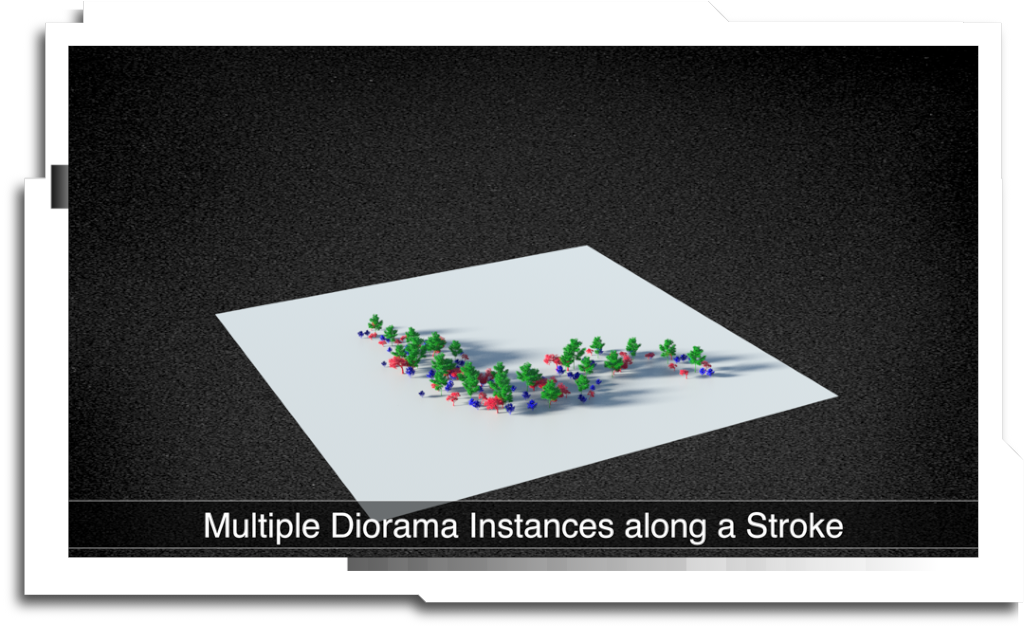

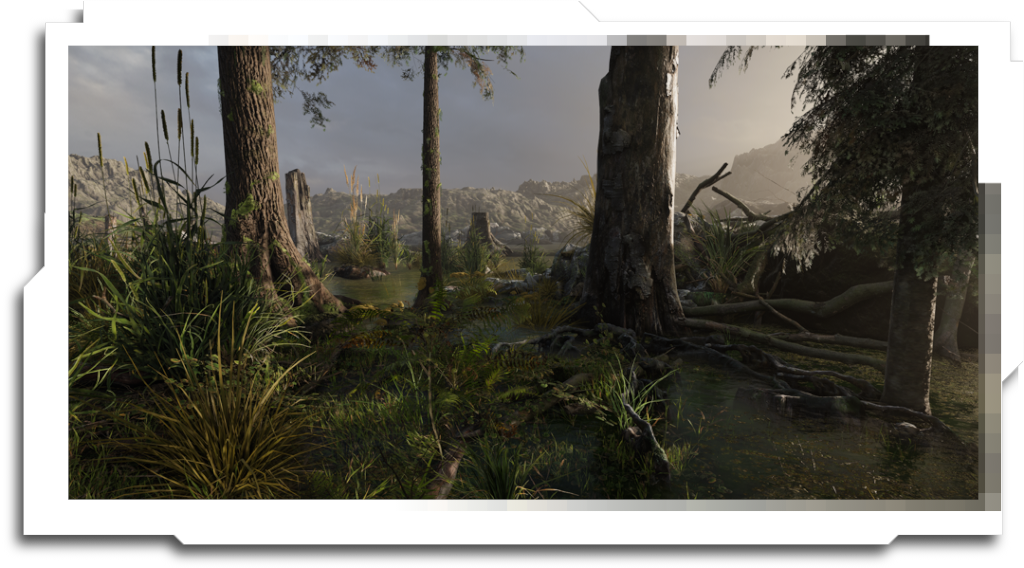

This page gathers technical art tools, personal projects and development challenges that don’t really fit anywhere else. Older experiments in Unity and Unreal can be found on the ‘Realtime’ page; I’ve decided to leave that page as-is and keep the two separate by time period.